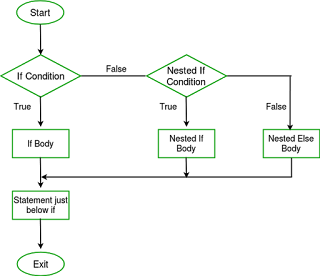

How “nested Else” creates #bias and the impact on automated decision making

On page 191, John explores the Else Test.

----

At a simple level, a nested Python if/elif/else statement can look like the code below. It is beautiful in its simplicity and offers a repeatable, deterministic way to match a letter grade to the numeric mark obtained. In each case there is exactly one output, based on the actual input mark.

Happy days.

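    grade = 85  # example input mark; any number works

    # The first condition that matches wins, so each mark gets exactly one label.
    if grade >= 90:
        print("A grade")
    elif grade >= 80:
        print("B grade")
    elif grade >= 70:
        print("C grade")
    elif grade >= 65:
        print("D grade")
    else:
        print("Failing grade")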
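As a minimal sketch, the same chain can be wrapped in a function so that the one-input, one-output behaviour is easy to check. The function name `letter_for` is hypothetical and not part of the original example; the thresholds are the ones used above.

```python
def letter_for(grade):
    """Return the label for a numeric mark (hypothetical helper)."""
    if grade >= 90:
        return "A grade"
    elif grade >= 80:
        return "B grade"
    elif grade >= 70:
        return "C grade"
    elif grade >= 65:
        return "D grade"
    else:
        return "Failing grade"


# The same mark always yields the same label.
for mark in (95, 80, 69, 64):
    print(mark, letter_for(mark))
```

Calling `letter_for` twice with the same mark cannot give two different answers, which is what makes the grading case feel so comfortable.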

Let’s change the case slightly to a question that is far more difficult to answer: “Are you a good parent?” We can approach the problem in two ways. The simple way hides the complexity and relies on a score that determines whether you are a good parent (code below).

    grade = 85  # example parenting score; how it was produced is not shown

    if grade >= 90:
        print("A grade Parent")
    elif grade >= 80:
        print("B grade Parent")
    elif grade >= 70:
        print("C grade Parent")
    elif grade >= 65:
        print("D grade Parent")
    else:
        print("Failing grade Parent")

The astute will see that we now need to get under the skin of who, how and what was used to create the grading number, and who determined the boundaries. This is where bias occurs: in the unseen factors of the decision making.
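To make the point about boundaries concrete, here is a small sketch in which the same score is graded under two sets of cut-offs. Both sets of boundaries, and the names `STRICT`, `LENIENT` and `grade_parent`, are invented for illustration; neither comes from a real scoring system.

```python
# Two hypothetical sets of boundaries, chosen by two different people.
STRICT = [(90, "A"), (80, "B"), (70, "C"), (65, "D")]
LENIENT = [(80, "A"), (70, "B"), (60, "C"), (50, "D")]


def grade_parent(score, boundaries):
    """Return the first label whose cut-off the score meets, else 'Failing'."""
    for cutoff, label in boundaries:
        if score >= cutoff:
            return label + " grade Parent"
    return "Failing grade Parent"


score = 64  # the same input in both cases
print(grade_parent(score, STRICT))   # Failing grade Parent
print(grade_parent(score, LENIENT))  # C grade Parent
```

The code is just as deterministic as before; the difference in outcome comes entirely from who set the cut-offs.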

However, we can take another approach which opens the idea up wider.

    # Illustrative answers; the variable names are stand-ins for the questions.
    gave_sweets = True   # "did you give your kids sweets"
    was_reward = False   # "was it a reward"
    was_bribe = True     # "was it a bribe"

    if gave_sweets:
        if was_reward:
            propensity_Parent_being_good = +1
        elif was_bribe:
            propensity_Parent_being_good = -1
        else:  # unsure of purpose
            propensity_Parent_being_good = 0

So why is this interesting?

You can only have so many nested if statements before the code becomes unwieldy, slow to run and of poor quality. The tests you perform (which depend on the data you have access to) determine your decision. Whoever determines the questions and the levels introduces a bias, and that bias is there to create value for the company making the calculation. What it means is that our very computing languages and structures create a bias.
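A rough sketch of that dependence: the same facts about one parent are scored by two evaluators who chose different questions. The facts, the questions and the weights are all made up for illustration, as are the names `facts`, `evaluator_a`, `evaluator_b` and `propensity`.

```python
# Hypothetical facts about one parent.
facts = {"gave_sweets": True, "was_reward": False, "reads_bedtime_story": True}

# Each evaluator asks different questions; a True answer adds the given weight.
evaluator_a = {"gave_sweets": -1}
evaluator_b = {"gave_sweets": -1, "reads_bedtime_story": +1, "was_reward": +1}


def propensity(facts, questions):
    """Sum the weights of the questions whose answer is True."""
    return sum(weight for q, weight in questions.items() if facts.get(q, False))


print(propensity(facts, evaluator_a))  # -1
print(propensity(facts, evaluator_b))  # 0
```

Neither evaluator has changed the facts; only the choice of questions differs, and with it the verdict.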

Further reading

Coding Tips with Bias https://medium.com/@ritidass29/coding-tips-to-subdue-psychological-bias-1c10b23b3b3e

Pew Report Bias in systems https://www.pewinternet.org/2017/02/08/theme-4-biases-exist-in-algorithmically-organized-systems/

Algorithms Are Not Inherently Biased https://www.datadriveninvestor.com/2019/04/08/algorithms/